Land Use

Land use is a category of computer vision (CV) classification algorithms in GO that operate on satellite imagery to classify the land use (also known as land cover) type of each part of your areas of interest (AOIs). In layperson's terms, the algorithms tell you where the buildings, roads, vegetation, etc., are. By running land use analyses over different time periods, you can also identify change, such as building or road construction and deforestation.
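As a rough illustration of change detection, the sketch below compares two land use results for the same AOI and counts how many pixels moved from one class to another (for example, Forest to Buildings). It assumes both results have already been rasterized onto the same pixel grid as 2D lists of class names; GO itself delivers MultiPolygon geometries, and the helper name `class_transitions` is hypothetical, not part of any GO API.

```python
from collections import Counter

def class_transitions(before, after):
    """Count per-pixel class changes between two land use rasters.

    Hypothetical helper: `before` and `after` are 2D lists of class names
    covering the same AOI on the same pixel grid, from two time periods.
    """
    if len(before) != len(after) or any(len(b) != len(a) for b, a in zip(before, after)):
        raise ValueError("rasters must have the same shape")
    transitions = Counter()
    for row_before, row_after in zip(before, after):
        for old, new in zip(row_before, row_after):
            if old != new:  # only record pixels whose class changed
                transitions[(old, new)] += 1
    return transitions

# Toy rasters for two periods (illustrative data, not real results).
before = [
    ["Forest", "Forest", "Grass"],
    ["Forest", "Water", "Grass"],
]
after = [
    ["Forest", "Buildings", "Grass"],
    ["Other", "Water", "Roads"],
]

changes = class_transitions(before, after)
# Pixels that were Forest in the first period but not in the second.
forest_lost = sum(n for (old, _new), n in changes.items() if old == "Forest")
print(changes[("Forest", "Buildings")], forest_lost)  # 1 2
```

Multiplying such pixel counts by the area of one pixel would give an approximate changed area per transition.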

Land Use Aggregation

Our land use algorithms "aggregate" analyses across multiple satellite images to deliver a single, aggregated land use result. This is necessary because a single satellite image is often insufficient to cover a large area of interest, and an individual image may contain cloudy areas that are unusable.

As such, the land use algorithms are configured with a start and end date for selecting satellite imagery, along with additional imagery selection parameters. We recommend a date range of at least 1-3 months, and up to 1 year, depending on how much satellite imagery is available.
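The date-range guidance above can be encoded as a simple sanity check before submitting a project. This is a minimal sketch: the configuration keys `start_date` and `end_date`, the helper `check_date_range`, and the thresholds (roughly 30 days minimum, 366 days maximum) are assumptions made for illustration, not GO's actual configuration schema.

```python
from datetime import date

# Assumed thresholds approximating "at least 1-3 months, up to 1 year".
MIN_DAYS = 30
MAX_DAYS = 366

def check_date_range(start: date, end: date):
    """Return the range length in days and a list of issues (hypothetical helper)."""
    if end <= start:
        raise ValueError("end date must be after start date")
    days = (end - start).days
    issues = []
    if days < MIN_DAYS:
        issues.append(f"{days}-day range may not yield enough cloud-free imagery")
    if days > MAX_DAYS:
        issues.append(f"{days}-day range exceeds the suggested maximum of about 1 year")
    return days, issues

# Hypothetical project configuration.
config = {"start_date": date(2019, 6, 1), "end_date": date(2019, 8, 31)}
days, issues = check_date_range(config["start_date"], config["end_date"])
print(days, issues)  # 91 []
```

A short range is flagged rather than rejected, since in areas with plenty of imagery a shorter window may still be enough.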

During the analysis, our land use computer vision algorithms run on every satellite image selected in the previous step. Cloudy parts of each image are detected and discarded. The final aggregation step then combines the valid results from multiple images into a single cloud-free result covering your AOI.
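This page does not specify exactly how valid results are combined, so the sketch below swaps in one common technique, a per-pixel majority vote, purely to illustrate the idea. It assumes each image's classification has been rasterized onto a shared grid, with `None` marking pixels discarded as cloudy; the function name `aggregate` is hypothetical.

```python
from collections import Counter
from typing import List, Optional

Grid = List[List[Optional[str]]]

def aggregate(results: List[Grid]) -> Grid:
    """Combine per-image classifications by per-pixel majority vote.

    Each grid holds a class name per pixel, or None where the pixel was
    detected as cloudy. Pixels with no valid observation stay None.
    """
    if not results:
        raise ValueError("need at least one result")
    rows, cols = len(results[0]), len(results[0][0])
    combined: Grid = [[None] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            # Only count cloud-free observations of this pixel.
            votes = Counter(g[r][c] for g in results if g[r][c] is not None)
            if votes:
                combined[r][c] = votes.most_common(1)[0][0]
    return combined

# Three toy per-image results with cloudy (None) pixels.
img1 = [["Forest", None], ["Water", "Grass"]]
img2 = [["Forest", "Buildings"], [None, "Grass"]]
img3 = [[None, "Buildings"], ["Water", "Forest"]]
combined = aggregate([img1, img2, img3])
print(combined)  # [['Forest', 'Buildings'], ['Water', 'Grass']]
```

Pixels that are cloudy in every selected image stay `None`, which is one reason a longer date range helps produce complete coverage.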

The following table summarizes the generally available land use algorithms, including their imagery source and resolution, applicability, and output:

| Algorithm | Imagery Source | Applicability | Output Classes | Output Geometry |
|---|---|---|---|---|
| Land Use (1.5m) | Airbus SPOT 1.5m, color | Global, wide-area | Multi-Class (Buildings, Roads, Forest, Grass, Water, Other) | MultiPolygon |
| Land Use (3-5m) | Planet Dove 3-5m, color | Global, wide-area | Multi-Class (Buildings, Roads, Forest, Grass, Water, Mining, Golf Course, Parking Lot, Other) | MultiPolygon |

- Imagery Source: the satellite imagery provider and resolution used by each algorithm. See the imagery data sources documentation for more details.

- Applicability: the areas and conditions under which the algorithms are expected to perform well.

- Output Classes: our land use algorithms output multi-class results.

- Output Geometry: our land use algorithms output a MultiPolygon geometry for each land use class.
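Because each class comes back as a MultiPolygon, a natural first step is to total the area per class. The sketch below does this with the shoelace formula on GeoJSON-style coordinates. It assumes the geometries use a projected coordinate system measured in meters (longitude/latitude coordinates would need reprojecting first) and that each feature stores its class under a hypothetical `properties["class"]` key; the actual GO output schema may differ.

```python
def ring_area(ring):
    """Absolute area of a ring of [x, y] points, via the shoelace formula."""
    total = 0.0
    # Pair each vertex with the next one, wrapping around to the first.
    for (x1, y1), (x2, y2) in zip(ring, ring[1:] + ring[:1]):
        total += x1 * y2 - x2 * y1
    return abs(total) / 2.0

def multipolygon_area(coordinates):
    """Area of GeoJSON MultiPolygon coordinates: each outer ring minus its holes."""
    area = 0.0
    for polygon in coordinates:
        outer, *holes = polygon
        area += ring_area(outer) - sum(ring_area(hole) for hole in holes)
    return area

def area_by_class(features):
    """Sum MultiPolygon area per land use class (assumes a hypothetical 'class' property)."""
    totals = {}
    for feature in features:
        cls = feature["properties"]["class"]
        geom_area = multipolygon_area(feature["geometry"]["coordinates"])
        totals[cls] = totals.get(cls, 0.0) + geom_area
    return totals

# Toy features in projected meters (illustrative data, not real output).
features = [
    {"properties": {"class": "Buildings"},
     "geometry": {"type": "MultiPolygon", "coordinates": [
         [[[0, 0], [10, 0], [10, 10], [0, 10], [0, 0]]],
         [[[20, 0], [25, 0], [25, 4], [20, 4], [20, 0]]],
     ]}},
    {"properties": {"class": "Water"},
     "geometry": {"type": "MultiPolygon", "coordinates": [
         [[[0, 0], [100, 0], [100, 50], [0, 50], [0, 0]],
          [[10, 10], [20, 10], [20, 20], [10, 20], [10, 10]]],  # hole
     ]}},
]
print(area_by_class(features))  # {'Buildings': 120.0, 'Water': 4900.0}
```

For production use, a geometry library with proper projection support would be a safer choice than hand-rolled area code.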

Global applicability

Our object detection algorithms are built to work globally by using a geographically diverse training dataset. For example, the Cars algorithm is trained on satellite imagery from the Americas, Europe, the Middle East, and Asia, so that it learns to recognize cars in different parts of the world, where both the objects and the background environment may look different.

Wide-area applicability

Wide-area applicability means that an algorithm is trained to distinguish the relevant objects amid the highly variable backgrounds found in satellite imagery. As a result, you do not need to limit your AOIs to areas where you expect the objects to appear.

For example, with the Cars algorithm you do not need to create individual car park AOIs: simply analyze entire facilities or AOIs, and the algorithm will automatically pick out the cars wherever they are.

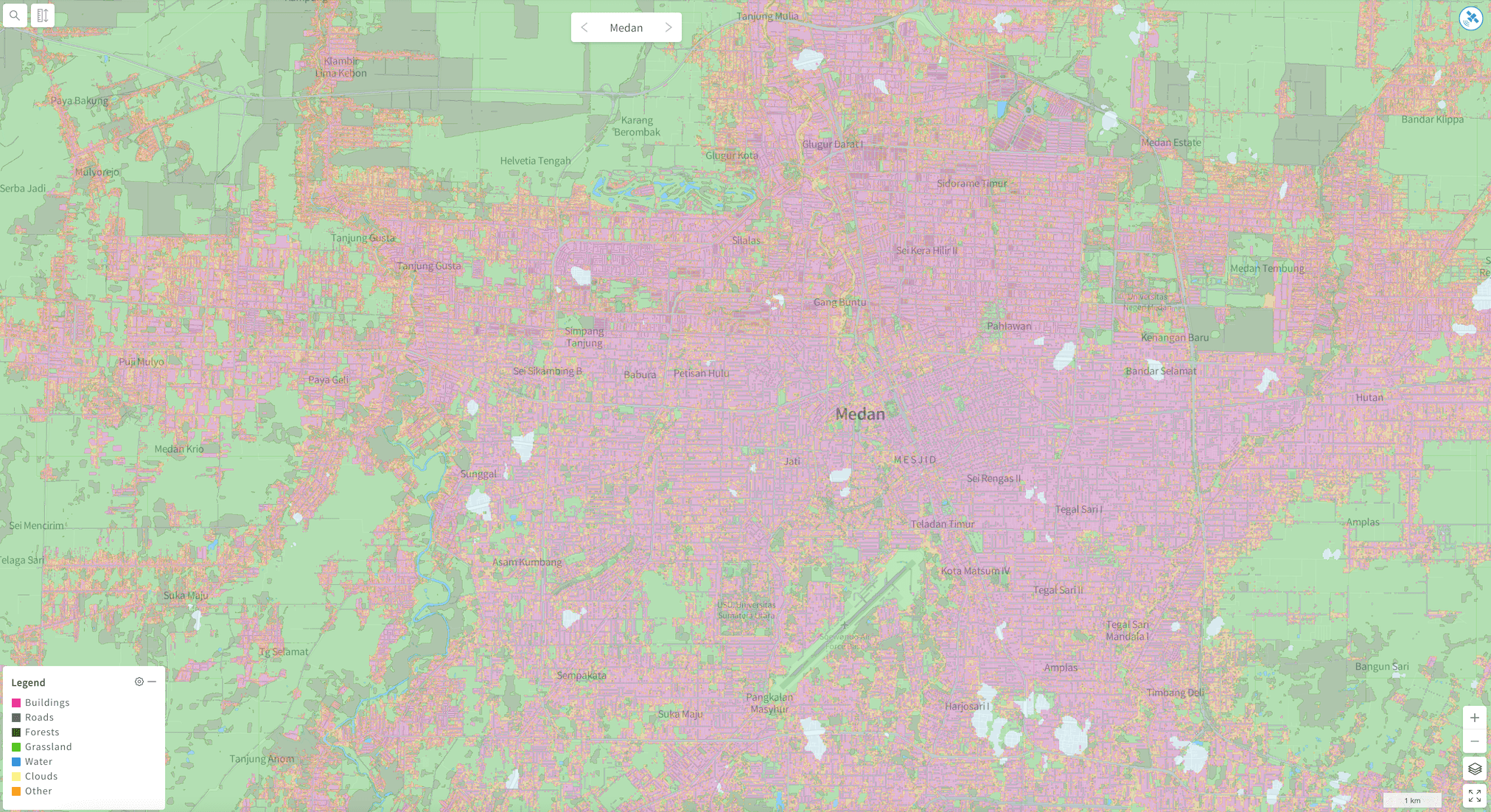

Land Use (1.5m) results for the Indonesian city of Medan

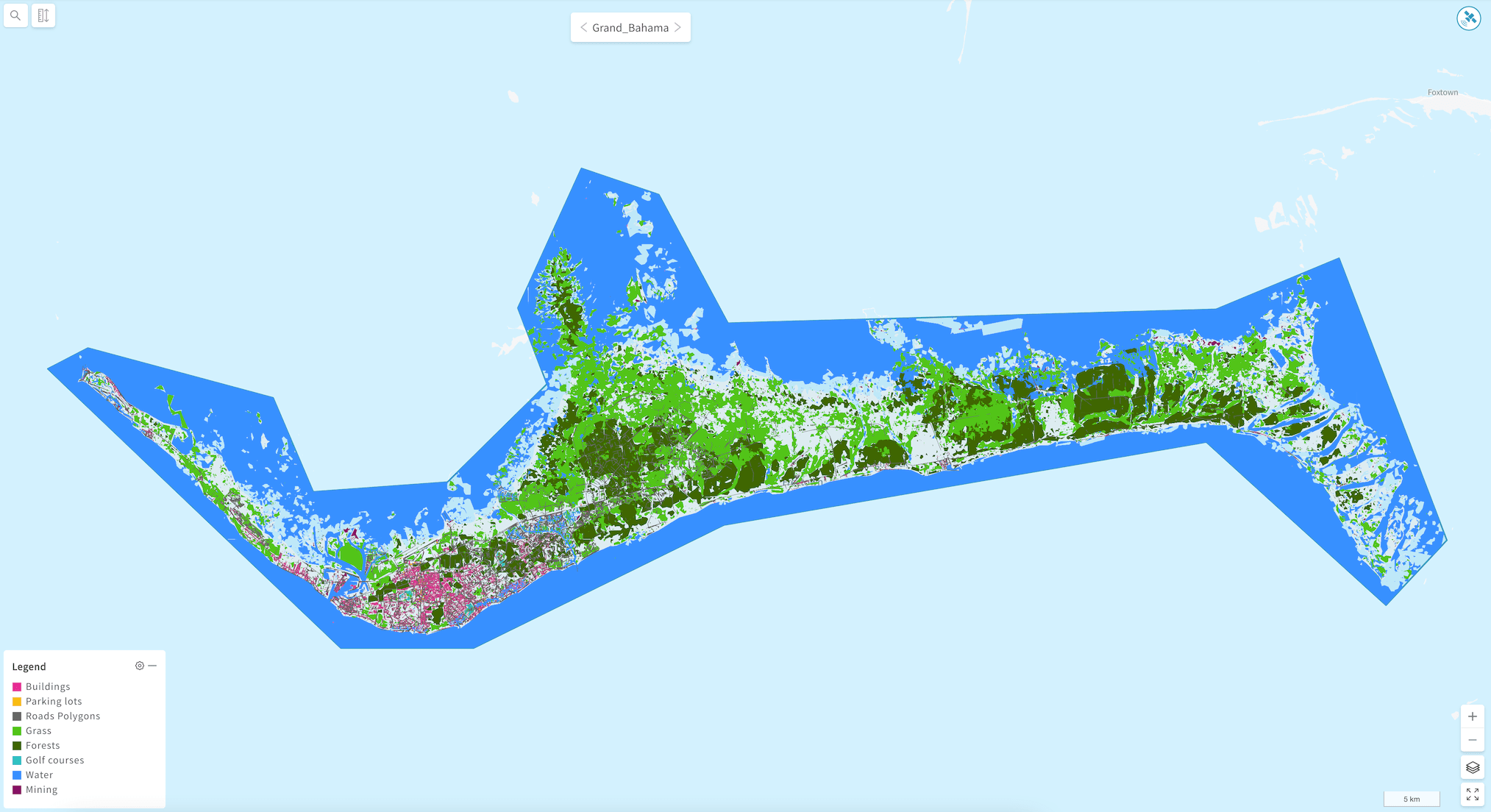

Land Use (3-5m) results for Grand Bahama, just before Hurricane Dorian

